The most basic optimization problem is

We call a design point. Design points can be represented as a vector of values. The function is called the objective function. The set is called the feasible set. The goal is to find the that minimizes the objective function. We call such a solution or minimizer. Often optimization problems have constraints which provides limitations to the feasible set. For example,

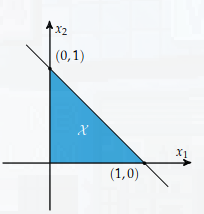

has the feasible set shown in the figure below.

One simple approach to optimization of a function is to look at the set of critical points that lie inside the feasible set. Unfortunately, it is not always easy to tell if a critical point is a global minimum; typically we can only check if it is a local minimum, i.e., there is some such that for all such that .

One simple approach to optimization of a function is to look at the set of critical points that lie inside the feasible set. Unfortunately, it is not always easy to tell if a critical point is a global minimum; typically we can only check if it is a local minimum, i.e., there is some such that for all such that .

Local minima fall into two categories: strong and weak. A strong local minima, also known as a strict local minima, is a point such that for some it follows that whenever and .

Note that while a derivative of zero is a necessary condition for a local minima, it is not sufficient as the point may be an inflection point.

Univariate

A necessary condition for a design point to be a local minimum is that

- , the first-order necessary condition (FONC)

- , the second-order necessary condition (SONC).

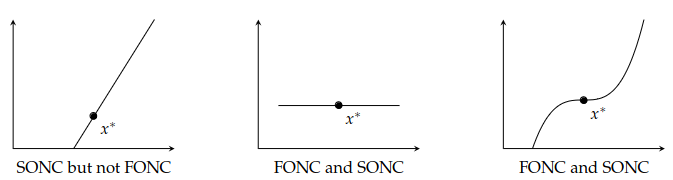

For local maximum we simply SONC to . While these conditions are necessary, they are not sufficient. This can be seen in the three functions below:

These conditions can be derived via Taylor expansion. Let be a local minimum. Notice that

These conditions can be derived via Taylor expansion. Let be a local minimum. Notice that

If is a local minimum, then

Therefore, combining these two gives us that

As for the second-order necessary condition, notice that

By the first-order condition,

Multivariate

We get similar necessary conditions for to be a local minimum of a multivariate function:

- , the first-order necessary condition (FONC)

- for all , the second-order necessary condition (SONC).

Recall that for scalar-valued differentiable function , the gradient is the vector field

Given a function , the Hessian matrix is is the matrix of second-order partial derivatives

Thus, the SONC is checking when the Hessian matrix is positive semi-definite.

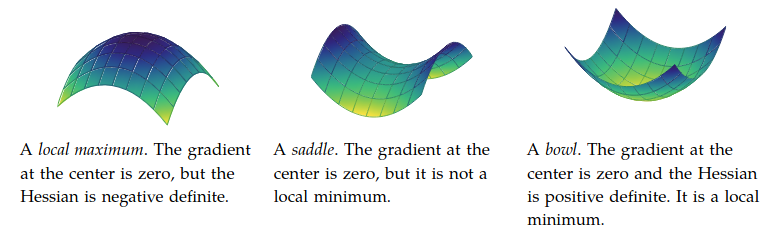

Some example cases are given in the figure below.