Linear programming involves solving optimization problems with linear objective functions and linear constraints. The typical LP problem is formed as follows:

We typically use matrices to represent linear programs in general form:

We can convert general form linear programs to standard form:

We convert into . We then split into two constraints: and . Next, to ensure all entries are nonnegative, we replace with and constrain and giving us

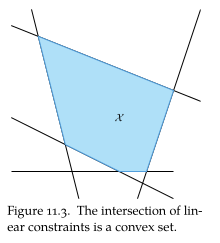

Each inequality forms a half-space. The collection of inequalities forms a convex set.

As a result, any local feasible minimum is also a global feasible minimum.

Equality Form

We often represent linear programs in equality form:

where and have components, is an matrix, and has components, i.e., there are nonnegative design variables and a system of equations.

Any linear program in standard form can be transformed to equality form by changing the constraints:

where is called a slack variable that enforces equality. For example, consider the following linear program:

by introducing two slack variables (one for each constraint) and splitting , we get

which is in equality form.

Simplex Algorithm

The simplex algorithm is an algorithm for solving linear programs in equality form by moving between vertices of the feasible set. We assume the rows of are linearly independent and the number of equality constraints is at most the number of design variables , i.e., the problem is not over constrained.

Linear programs in equality form have feasible sets that form a convex polytope. Points on the interior of the feasible set are never optimal since we can improve them by moving in the direction. Moreover, points on the faces of the polytope can only be optimal if the face is perpendicular to . Finally, vertices have the potential to be optimal.

The simplex algorithm searches over the feasible set’s vertices for the optimal vertex. We can represent every vertex be uniquely defined components of that equal zero. For example, if , then

uniquely defines a point.

We partition the component indices, , into two sets, and , such that

- The design values associated with are zero )

- The design values associated with may be zero ()

- has exactly elements and has exactly elements. We define to be the vector consisting of components of that are in , likewise is the vector consisting of components of that are in (note ).

We then find the vertex associated with partition by using the matrix formed by taking the columns of selected by . We then get .

Example: Consider the constraints

We want to verify that is feasible and that it has no more than three nonzero component. Notice that

is invertible. Moreover,

Therefore, is a vertex of the feasible set polytope. Note we could also have used .

While every vertex has an associated partition , not every partition corresponds to a vertex. A partition corresponds to a vertex only if is a nonsingular and is feasible.

The simplex algorithm has two phases:

- An initialization phase which identifies a vertex partition

- An optimization phase which transitions between vertex partitions toward a partition corresponding to an optimal vertex.

First-Order Necessary Conditions

Using the Lagrangian for the equality form gives us

with the following FONCs:

- feasibility: ,

- dual feasibility:

- complementary slackness: (element-wise product)

- stationary:

For linear programs, the FONCs are sufficient conditions for optimaility.

We can decompose the stationary condition into and components:

Choosing satisfies the complementary slackness. As a result we get that

Plugging this in to our stationary equation gives us that

If contains negative components, then dual feasibility is not satisfied and the vertex is sub-optimal.

Optimization Phase

At this point of the algorithm we have a partition that corresponds to a vertex of the feasible set polytope. We can update the partition by swapping indices between and .

A transition between vertices must satisfy . We choose a entering index . Then the new vertex must satisfy

The index replaces a leaving index and becomes zero in . We call such a swap pivoting. We then can solve for the new design point

In particular we want when the leaving component is zero, i.e., . Solving for gives us

We pick the leaving index through the minimum ratio test: select the leaving index that minimizes . With the leaving index selected, we swap and between and .

def edge_transition(A, c, b, B, q):

n = A.shape[1]

b_inds = sorted(B)

n_inds = # indices not in B

AB = A[:,b_inds]

ABinv = np.linalg.inv(AB)

d = np.matmul(ABinv, A[:,n_inds[q]])

xB = np.matmul(ABinv, b)

# Pick the leaving index p that minimizes x'_q

p, xq = 0, np.inf

for i in range(d):

if d[i] > 0:

v = xB[i] / d[i]

if v < xq:

p, xq = i, v

return (p, xq)Different heuristics can be used to select an entering index :

- Greedy, choose the that maximally reduces

- Dantzig’s rule, choose with the most negative entry in .

- Bland’s rule, choose the first with a negative entry in .

Example: Consider the equality-form linear program with

and the initial vertex defined by . We first extract :

and compute :

and :

Notice that has a negative element, so is sub-optimal. We pivot on the index of the negative element, . Using edge transition we get that gives the minimal . Thus, we update our set of indices to , ending the first iteration.

Upon the second iteration, we find the indices is optimal as has no negative entries.

Initialization Phase

Before performing the optimization phase, we need an initial partition corresponding to a vertex. We can find such a partition by solving an auxiliary linear program:

where is the diagonal matrix whose diagonal entries are given by

We solve the auxiliary linear program with a partition that selects only the -values. As a result, the corresponding vertex has and each -element as the absolute value of the corresponding -value.

Example: Consider the following equality-form linear program:

we can identify a feasible vertex by solving

with an initial vertex defined by . The initial vertex has

and thus, . From here we can then begin the optimization phase to get the feasible vertex in the auxiliary problem, or in the original problem.