Derivatives

The derivative $f'(x)$ of a univariate function $f$ is the rate at which the value of $f$ changes at $x$. It is often visualized as the tangent line to the graph of $f$ at $x$. The derivative provides a linear approximation of the function near $x$ by stepping along the tangent line:

$$f(x + \Delta x) \approx f(x) + f'(x)\,\Delta x$$

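The limit definition of the derivative has three variants.

- Forward difference: $f'(x) = \lim_{h \to 0} \dfrac{f(x + h) - f(x)}{h}$

- Central difference: $f'(x) = \lim_{h \to 0} \dfrac{f(x + h/2) - f(x - h/2)}{h}$

- Backward difference: $f'(x) = \lim_{h \to 0} \dfrac{f(x) - f(x - h)}{h}$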

Derivatives in Multiple Dimensions

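The gradient is a generalization of the derivative to multivariate functions. The gradient of $f$ at $\mathbf{x}$, denoted $\nabla f(\mathbf{x})$, is the vector

$$\nabla f(\mathbf{x}) = \left[ \frac{\partial f(\mathbf{x})}{\partial x_1}, \; \frac{\partial f(\mathbf{x})}{\partial x_2}, \; \ldots, \; \frac{\partial f(\mathbf{x})}{\partial x_n} \right]$$

that points in the direction of steepest ascent of the tangent hyperplane.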

The Hessian of a multivariate function is the matrix containing all the second-order partial derivatives.

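The directional derivative $\nabla_{\mathbf{s}} f(\mathbf{x})$ measures the rate of change of $f$ in a particular direction $\mathbf{s}$ at a point $\mathbf{x}$. It is defined by a vector variant of the difference quotient:

$$\nabla_{\mathbf{s}} f(\mathbf{x}) = \lim_{h \to 0} \frac{f(\mathbf{x} + h\mathbf{s}) - f(\mathbf{x})}{h}$$

It can be computed by taking the dot product of the direction with the gradient,

$$\nabla_{\mathbf{s}} f(\mathbf{x}) = \nabla f(\mathbf{x})^\top \mathbf{s}$$

Among directions $\mathbf{s}$ of unit length, the directional derivative is maximal in the direction of the gradient and minimal in the direction directly opposite the gradient.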
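The agreement between the difference-quotient definition and the dot-product formula can be checked numerically. The sketch below is only illustrative: the test function `f`, its hand-derived gradient `grad_f`, the point, and the direction are all assumptions chosen for the example and do not come from the notes.

```python
import math

def f(x):
    # Hypothetical test function: f(x1, x2) = x1 * x2 + sin(x1)
    return x[0] * x[1] + math.sin(x[0])

def grad_f(x):
    # Hand-derived gradient of the hypothetical function above
    return [x[1] + math.cos(x[0]), x[0]]

def directional_derivative_fd(f, x, s, h=1e-6):
    # Difference quotient along direction s with a small but finite h
    x_step = [xi + h * si for xi, si in zip(x, s)]
    return (f(x_step) - f(x)) / h

def directional_derivative_dot(grad, x, s):
    # Dot product of the gradient with the direction
    return sum(gi * si for gi, si in zip(grad(x), s))

x = [1.0, 2.0]
s = [0.6, 0.8]  # unit-length direction
dd_fd = directional_derivative_fd(f, x, s)
dd_dot = directional_derivative_dot(grad_f, x, s)
print(dd_fd, dd_dot)
```

The two values should agree up to the truncation error of the finite step `h`.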

Numerical Differentiation

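In practice we often approximate the derivative of a function numerically. One family of approaches is finite difference methods, where we simply evaluate the difference quotient for a small $h$, i.e.,

$$f'(x) \approx \frac{f(x + h) - f(x)}{h}$$

In exact arithmetic, the approximation improves as $h$ shrinks. We can find the error term by looking at the Taylor expansion of $f$ about $x$:

$$f(x + h) = f(x) + f'(x)\,h + \frac{f''(x)}{2!} h^2 + \frac{f'''(x)}{3!} h^3 + \cdots$$

Solving for $f'(x)$ gives us

$$f'(x) = \frac{f(x + h) - f(x)}{h} - \frac{f''(x)}{2!} h - \frac{f'''(x)}{3!} h^2 - \cdots$$

Thus, the forward difference approximates the true derivative with an error term that is $O(h)$. One may show that the central difference method is more accurate, with an error term of $O(h^2)$.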
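The different error orders can be observed empirically. The following is a minimal sketch, assuming $\sin$ as a test function and $x = 0.5$ as the evaluation point (both are illustrative choices, not taken from the notes). The central difference here uses half steps on either side, which is one common convention.

```python
import math

def forward_difference(f, x, h):
    # (f(x + h) - f(x)) / h, error O(h)
    return (f(x + h) - f(x)) / h

def central_difference(f, x, h):
    # (f(x + h/2) - f(x - h/2)) / h, error O(h^2)
    return (f(x + h / 2) - f(x - h / 2)) / h

def backward_difference(f, x, h):
    # (f(x) - f(x - h)) / h, error O(h)
    return (f(x) - f(x - h)) / h

x0 = 0.5
exact = math.cos(x0)  # exact derivative of sin at x0
for h in (1e-1, 1e-2, 1e-3):
    err_fwd = abs(forward_difference(math.sin, x0, h) - exact)
    err_ctr = abs(central_difference(math.sin, x0, h) - exact)
    print(f"h={h:g}  forward error={err_fwd:.2e}  central error={err_ctr:.2e}")
```

Shrinking `h` by a factor of 10 should shrink the forward error by roughly 10 and the central error by roughly 100, as long as `h` stays large enough that floating-point rounding does not dominate.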

Complex Step Method

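Finite difference methods run into the issue of choosing a suitable value of $h$. If $h$ is too small, floating-point errors dominate; if it is too large, the approximation is poor. In the complex step method we evaluate $f$ after taking a step in the imaginary direction:

$$f(x + ih) = f(x) + ih f'(x) - h^2 \frac{f''(x)}{2!} - ih^3 \frac{f'''(x)}{3!} + \cdots$$

If we take the imaginary component and solve for $f'(x)$, we get

$$f'(x) = \frac{\operatorname{Im}(f(x + ih))}{h} + h^2 \frac{f'''(x)}{3!} - \cdots = \frac{\operatorname{Im}(f(x + ih))}{h} + O(h^2)$$

If we instead take the real part, we can approximate $f(x)$:

$$f(x) = \operatorname{Re}(f(x + ih)) + h^2 \frac{f''(x)}{2!} - \cdots = \operatorname{Re}(f(x + ih)) + O(h^2)$$

Hence, with a single evaluation we can approximate both $f(x)$ and $f'(x)$.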
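A minimal sketch of the complex step method, assuming the function accepts complex arguments. The test function (`cmath.sin`), the point $x = 0.5$, and the default step `h=1e-20` are illustrative assumptions. Because no subtraction of nearly equal values occurs, a very small step can be used here.

```python
import cmath
import math

def complex_step(f, x, h=1e-20):
    # Evaluate f once at x + ih; the real part approximates f(x) and
    # the imaginary part divided by h approximates f'(x)
    z = f(complex(x, h))
    return z.real, z.imag / h

value, derivative = complex_step(cmath.sin, 0.5)
print(value, math.sin(0.5))
print(derivative, math.cos(0.5))
```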

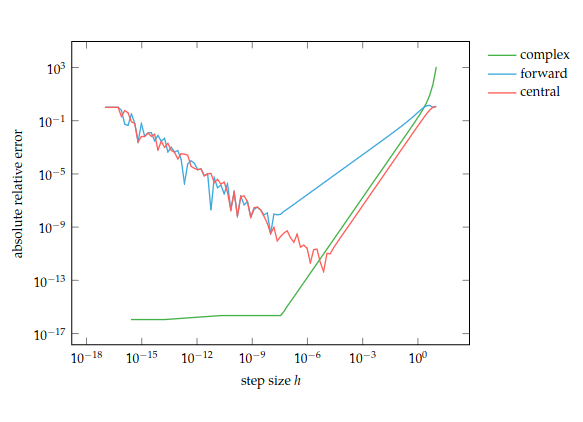

The chart above compares the absolute relative error of the complex step, forward difference, and central difference methods at a fixed point over a range of step sizes $h$.

Automatic Differentiation

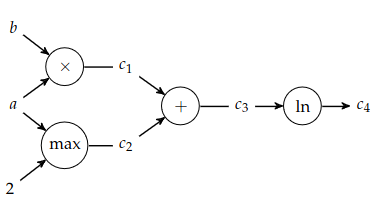

Imagine taking the partial derivative of a composite function with respect to one of its inputs. Doing so requires applying the chain rule several times. We can automate the process with a computational graph, which represents a function as a graph whose nodes are operations and whose edges are input-output relations.

There are two methods for automatically differentiating a function using its computational graph, forward accumulation and reverse accumulation.

Forward Accumulation

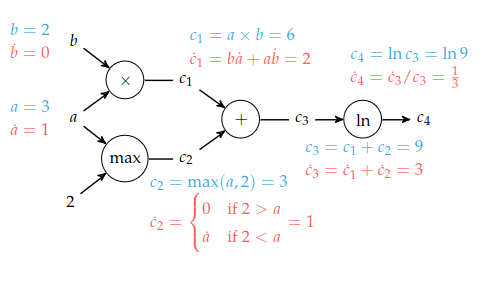

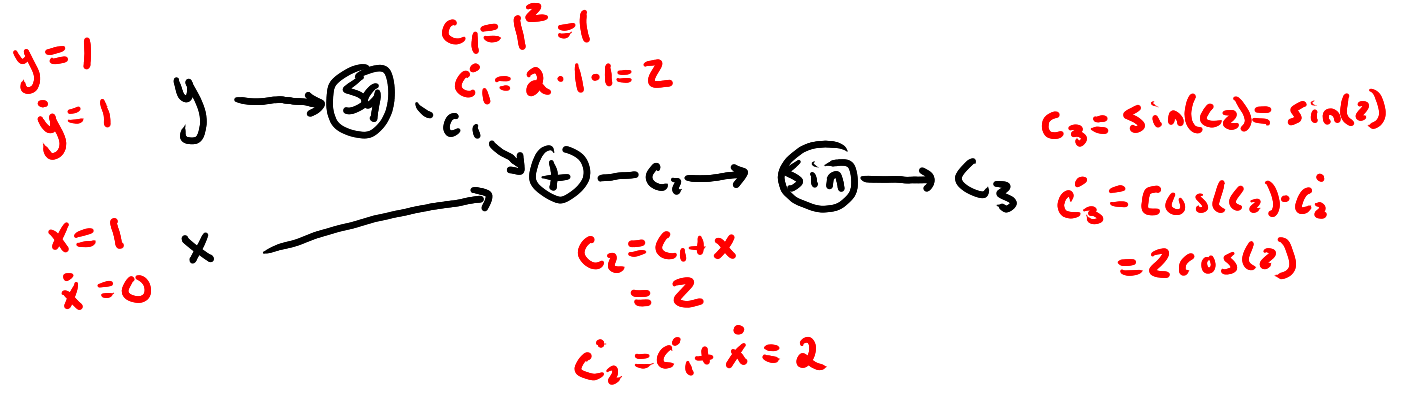

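To demonstrate forward accumulation, consider a function of two inputs evaluated at a specific point, where we seek the partial derivative with respect to one of the inputs.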

We start at the computational graph’s source nodes consisting of function inputs and constant values. For each node we find both the value and the partial derivative.

Next, we proceed down the tree one node at a time. We compute the value and local partial derivative using the previous nodes’ values and partial derivatives.

When we are done, we have both the function value and the partial derivative with respect to the chosen input.

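It is often beneficial to express the value and partial derivative as a dual number. A dual number is, like a complex number, written in the form $a + b\epsilon$, where $a, b \in \mathbb{R}$ and $\epsilon^2 = 0$ by definition. We can add and multiply dual numbers:

$$(a + b\epsilon) + (c + d\epsilon) = (a + c) + (b + d)\epsilon$$

$$(a + b\epsilon)(c + d\epsilon) = ac + (ad + bc)\epsilon$$

Let $f$ be a smooth function. Notice that if we use the Taylor series, every term with $\epsilon^k$ for $k \geq 2$ vanishes:

$$f(a + b\epsilon) = \sum_{k=0}^{\infty} \frac{f^{(k)}(a)}{k!} (b\epsilon)^k = f(a) + b f'(a)\,\epsilon$$

The result encodes both the function value and the derivative.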
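Dual-number arithmetic translates directly into code through operator overloading. The sketch below is a minimal illustration rather than a complete implementation: the `Dual` class, the `dual_sin` helper, and the example function `g` are hypothetical names introduced here, and only addition, multiplication, and sine are supported.

```python
import math

class Dual:
    """Dual number value + deriv * eps, with eps**2 == 0."""

    def __init__(self, value, deriv=0.0):
        self.value = value
        self.deriv = deriv

    def __add__(self, other):
        other = other if isinstance(other, Dual) else Dual(other)
        # (a + b eps) + (c + d eps) = (a + c) + (b + d) eps
        return Dual(self.value + other.value, self.deriv + other.deriv)

    __radd__ = __add__

    def __mul__(self, other):
        other = other if isinstance(other, Dual) else Dual(other)
        # (a + b eps)(c + d eps) = ac + (ad + bc) eps
        return Dual(self.value * other.value,
                    self.value * other.deriv + self.deriv * other.value)

    __rmul__ = __mul__

def dual_sin(d):
    # f(a + b eps) = f(a) + b f'(a) eps, applied to f = sin
    return Dual(math.sin(d.value), math.cos(d.value) * d.deriv)

def g(x, y):
    # Hypothetical example function: g(x, y) = x * y + sin(x)
    return x * y + dual_sin(x)

# Seed x with derivative 1 and y with derivative 0 to obtain dg/dx
result = g(Dual(2.0, 1.0), Dual(3.0, 0.0))
print(result.value, result.deriv)
```

Seeding a different input with derivative 1 yields the partial derivative with respect to that input, so forward accumulation needs one pass per input.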

Exercises

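Exercise 2.1. Adopt the forward difference method to approximate the Hessian of $f(\mathbf{x})$ using its gradient, $\nabla f(\mathbf{x})$. Answer: Recall that the $j$-th column of $\mathbf{H}$ is given by

$$\mathbf{H}_{\cdot j} = \frac{\partial}{\partial x_j} \nabla f(\mathbf{x})$$

We can approximate this vector by using the forward difference method on $\nabla f$:

$$\mathbf{H}_{\cdot j} \approx \frac{\nabla f(\mathbf{x} + h\mathbf{e}_j) - \nabla f(\mathbf{x})}{h}$$

where $\mathbf{e}_j$ is the vector with a 1 in the $j$-th position and zeroes elsewhere.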
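The column-by-column approximation can be sketched as follows. The gradient `grad_f` belongs to a hypothetical function $f(\mathbf{x}) = x_1^2 x_2 + x_2^3$ chosen only for illustration, and the step size `h=1e-6` is likewise an assumption.

```python
def grad_f(x):
    # Gradient of the hypothetical function f(x) = x1^2 * x2 + x2^3
    return [2 * x[0] * x[1], x[0] ** 2 + 3 * x[1] ** 2]

def hessian_forward_difference(grad, x, h=1e-6):
    # Column j is (grad(x + h e_j) - grad(x)) / h
    n = len(x)
    g0 = grad(x)
    H = [[0.0] * n for _ in range(n)]
    for j in range(n):
        x_step = list(x)
        x_step[j] += h  # step along the j-th basis vector
        gj = grad(x_step)
        for i in range(n):
            H[i][j] = (gj[i] - g0[i]) / h
    return H

H = hessian_forward_difference(grad_f, [1.0, 2.0])
for row in H:
    print(row)
```

For this function the exact Hessian at $(1, 2)$ is $\begin{bmatrix} 4 & 2 \\ 2 & 12 \end{bmatrix}$, so the printed entries should match it up to an error of order `h`. The approach costs $n + 1$ gradient evaluations for an $n$-dimensional input.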

Exercise 2.5. Draw the computational graph for . Use the computational graph with forward accumulation to compute at . Label the intermediate values and partial derivatives as they are propagated through the graph. Answer:

We get that and .

We get that and .

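Exercise 2.6. Combine the forward and backward difference methods to obtain a difference method for estimating the second-order derivative of a function $f$ at $x$ using three function evaluations. Answer: Take the difference of the forward and backward difference estimates of $f'$ and divide by $h$:

$$f''(x) \approx \frac{\dfrac{f(x + h) - f(x)}{h} - \dfrac{f(x) - f(x - h)}{h}}{h} = \frac{f(x + h) - 2f(x) + f(x - h)}{h^2}$$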
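A three-evaluation estimate of this kind, $(f(x + h) - 2f(x) + f(x - h))/h^2$, can be checked numerically. The sketch below assumes $\sin$ as a test function, $x = 0.5$, and a step of `h=1e-4`, all of which are illustrative choices.

```python
import math

def second_derivative(f, x, h=1e-4):
    # Difference of the forward and backward difference quotients,
    # divided by h; uses f(x + h), f(x), and f(x - h)
    return (f(x + h) - 2 * f(x) + f(x - h)) / h ** 2

approx = second_derivative(math.sin, 0.5)
exact_second = -math.sin(0.5)  # exact second derivative of sin
print(approx, exact_second)
```

The step is kept moderately large because dividing by $h^2$ amplifies floating-point rounding error more strongly than in first-derivative estimates.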